MITRE ATT&CK

What is MITRE ATT&CK™?

MITRE introduced ATT&CK (Adversarial Tactics, Techniques, and Common Knowledge) in 2013 as a way to describe and categorize adversarial behaviors based on real-world observations. ATT&CK is a structured list of known attacker behaviors, organized into tactics and techniques and published as a handful of matrices as well as via STIX/TAXII. Because this list is a fairly comprehensive representation of the behaviors attackers employ when compromising networks, it is useful for a variety of offensive and defensive measurements, representations, and other mechanisms.

Understanding ATT&CK Matrices

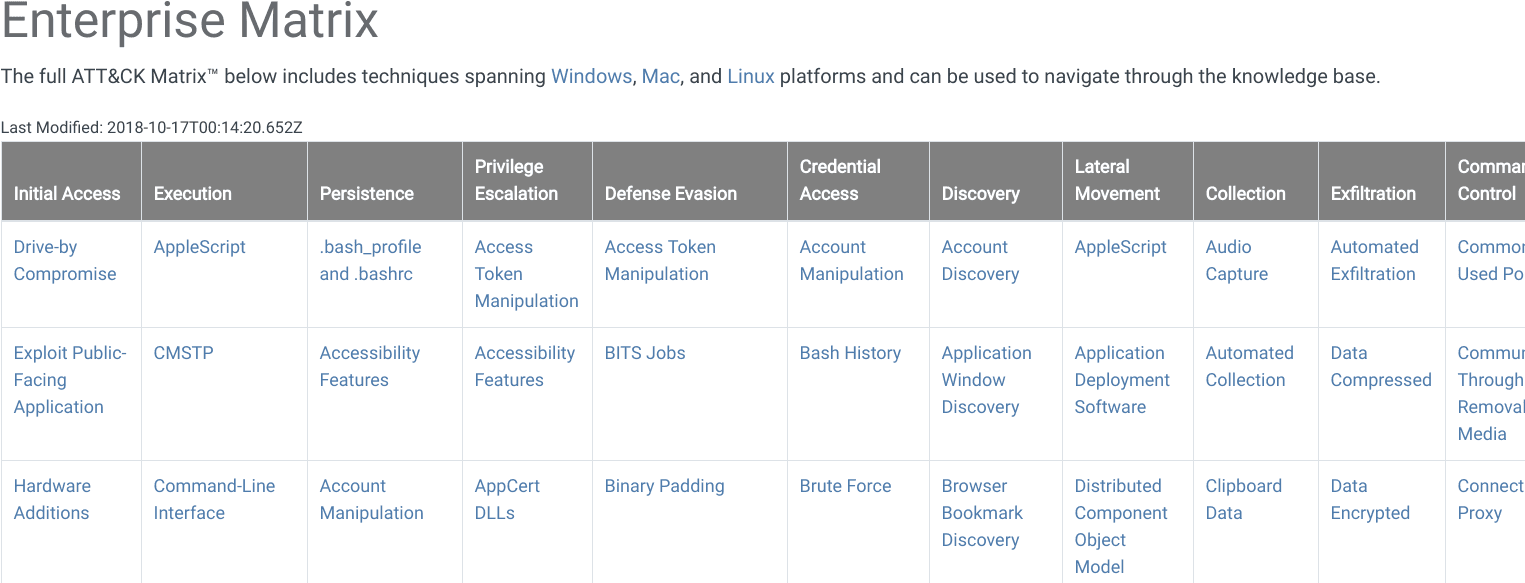

MITRE divides ATT&CK into a few different matrices: Enterprise, Mobile, and PRE-ATT&CK. Each matrix contains the tactics and techniques associated with its subject matter.

The Enterprise matrix is made up of tactics and techniques that apply to Windows, Linux, and/or macOS systems. Mobile contains tactics and techniques that apply to mobile devices. PRE-ATT&CK contains tactics and techniques related to what attackers do before they try to exploit a particular target network or system.

The Nuts and Bolts of ATT&CK: Tactics and Techniques

When looking at ATT&CK in the form of a matrix, the column titles across the top are tactics, which are essentially categories of techniques. Tactics are what attackers are trying to achieve, whereas the individual techniques are how they accomplish those steps or goals.

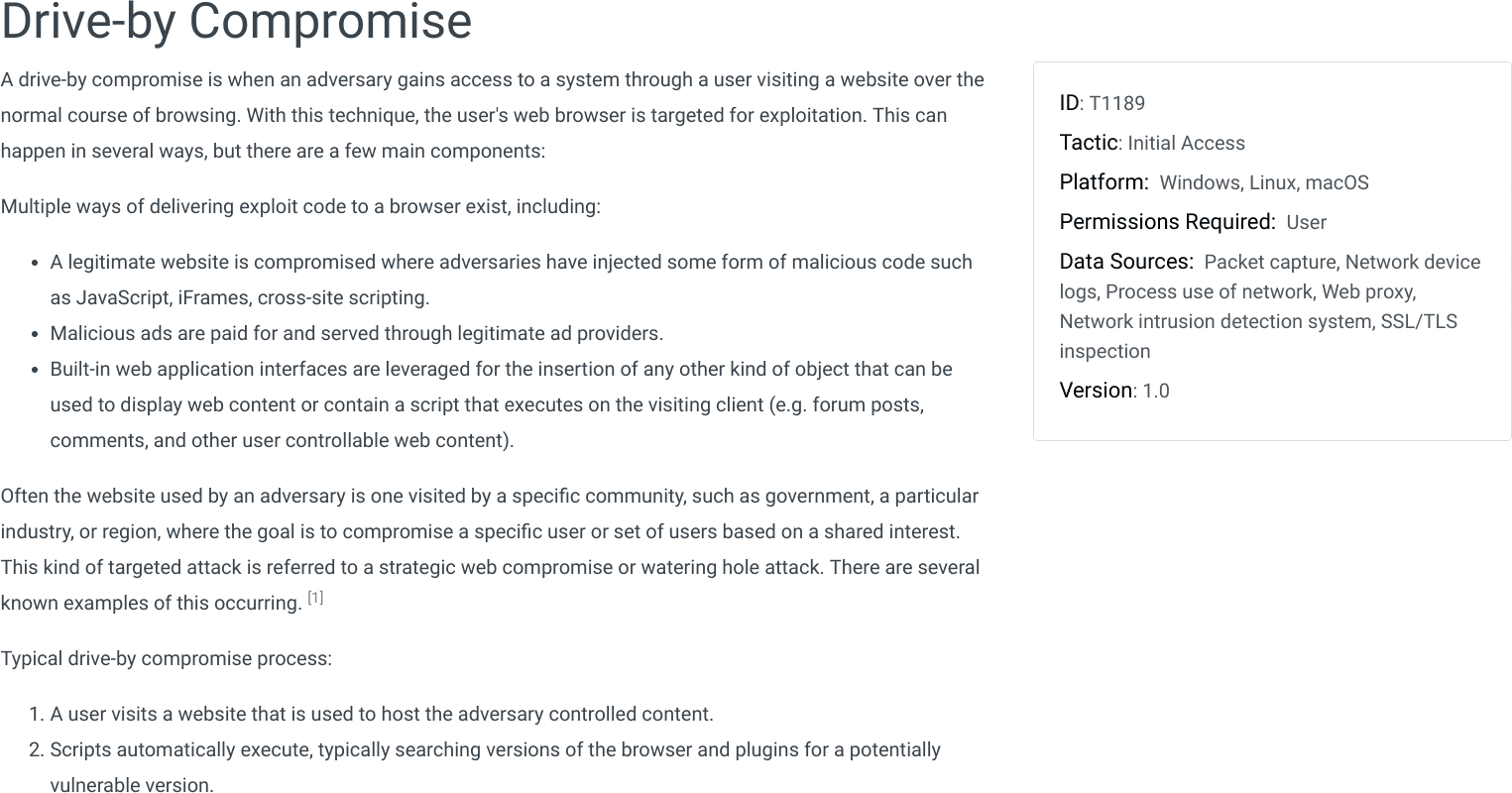

For example, one of the tactics is Lateral Movement. To achieve lateral movement in a network, an attacker will employ one or more of the techniques listed in the Lateral Movement column of the ATT&CK matrix. A technique is a specific behavior used to achieve a goal and is often a single step in a string of activities employed to complete the attacker’s overall mission. ATT&CK provides many details about each technique, including a description, examples, references, and suggestions for mitigation and detection.

As an example of how tactics and techniques work in ATT&CK, an attacker may wish to gain access to a network and install cryptocurrency mining software on as many systems as possible inside that network. To accomplish this overall goal, the attacker needs to successfully perform several intermediate steps. First, they gain access to the network, possibly through a Spearphishing Link. Next, they may need to escalate privileges through Process Injection. They can then obtain other credentials from the system through Credential Dumping and establish persistence by setting the mining script to run as a Scheduled Task. With this accomplished, the attacker may be able to move laterally across the network with Pass the Hash and spread the coin miner software to as many systems as possible.

In this example, the attacker had to successfully execute five steps – each representing a specific tactic or stage of their overall attack: Initial Access, Privilege Escalation, Credential Access, Persistence, and Lateral Movement. They used specific techniques within these tactics to accomplish each stage of their attack (spearphishing link, process injection, credential dumping, etc.).
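The relationship between the stages and techniques in this example can be sketched as a small data structure. This is only an illustration: the names `ATTACK_CHAIN`, `tactics_in_order`, and `techniques_by_tactic` are hypothetical, and the pairing of each technique with a single tactic follows the example in this article rather than the full ATT&CK matrix, where a technique may appear under more than one tactic.

```python
# Illustrative sketch only: (tactic, technique) pairs taken from the
# coin-miner example in this article, in the order the attacker uses them.
ATTACK_CHAIN = [
    ("Initial Access", "Spearphishing Link"),
    ("Privilege Escalation", "Process Injection"),
    ("Credential Access", "Credential Dumping"),
    ("Persistence", "Scheduled Task"),
    ("Lateral Movement", "Pass the Hash"),
]


def tactics_in_order(chain):
    """Return each tactic once, in the order it first appears in the chain."""
    seen = []
    for tactic, _technique in chain:
        if tactic not in seen:
            seen.append(tactic)
    return seen


def techniques_by_tactic(chain):
    """Group techniques under their tactic, like columns in a matrix."""
    grouped = {}
    for tactic, technique in chain:
        grouped.setdefault(tactic, []).append(technique)
    return grouped


if __name__ == "__main__":
    for tactic, techniques in techniques_by_tactic(ATTACK_CHAIN).items():
        print(f"{tactic}: {', '.join(techniques)}")
```

Grouping by tactic mirrors how the matrix is laid out: each key plays the role of a column title (the "what"), and each list holds the techniques (the "how") observed under it.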

The Differences Between PRE-ATT&CK and ATT&CK Enterprise

Together, PRE-ATT&CK and ATT&CK Enterprise form the full list of tactics, which roughly aligns with the Cyber Kill Chain. PRE-ATT&CK mostly aligns with the first three phases of the kill chain: reconnaissance, weaponization, and delivery. ATT&CK Enterprise aligns well with the final four phases of the kill chain: exploitation, installation, command & control, and actions on objectives.
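The rough alignment described above can be written down as a simple lookup. This is a minimal sketch that assumes the split stated in this article (first three phases to PRE-ATT&CK, last four to Enterprise); the names `KILL_CHAIN_PHASES` and `matrix_for_phase` are hypothetical and do not come from any MITRE tooling.

```python
# Illustrative sketch only: the seven kill chain phases in order,
# as listed in this article.
KILL_CHAIN_PHASES = [
    "reconnaissance",
    "weaponization",
    "delivery",
    "exploitation",
    "installation",
    "command & control",
    "actions on objectives",
]


def matrix_for_phase(phase):
    """Return the ATT&CK matrix that roughly aligns with a kill chain phase.

    Assumption from the article: the first three phases map to PRE-ATT&CK
    and the remaining four map to ATT&CK Enterprise.
    """
    index = KILL_CHAIN_PHASES.index(phase)  # raises ValueError if unknown
    return "PRE-ATT&CK" if index < 3 else "ATT&CK Enterprise"


if __name__ == "__main__":
    for phase in KILL_CHAIN_PHASES:
        print(f"{phase}: {matrix_for_phase(phase)}")
```

The alignment is approximate, so a lookup like this is best treated as a reading aid rather than a strict classification.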